AWS Platform Guide

Upgrade Flightdeck

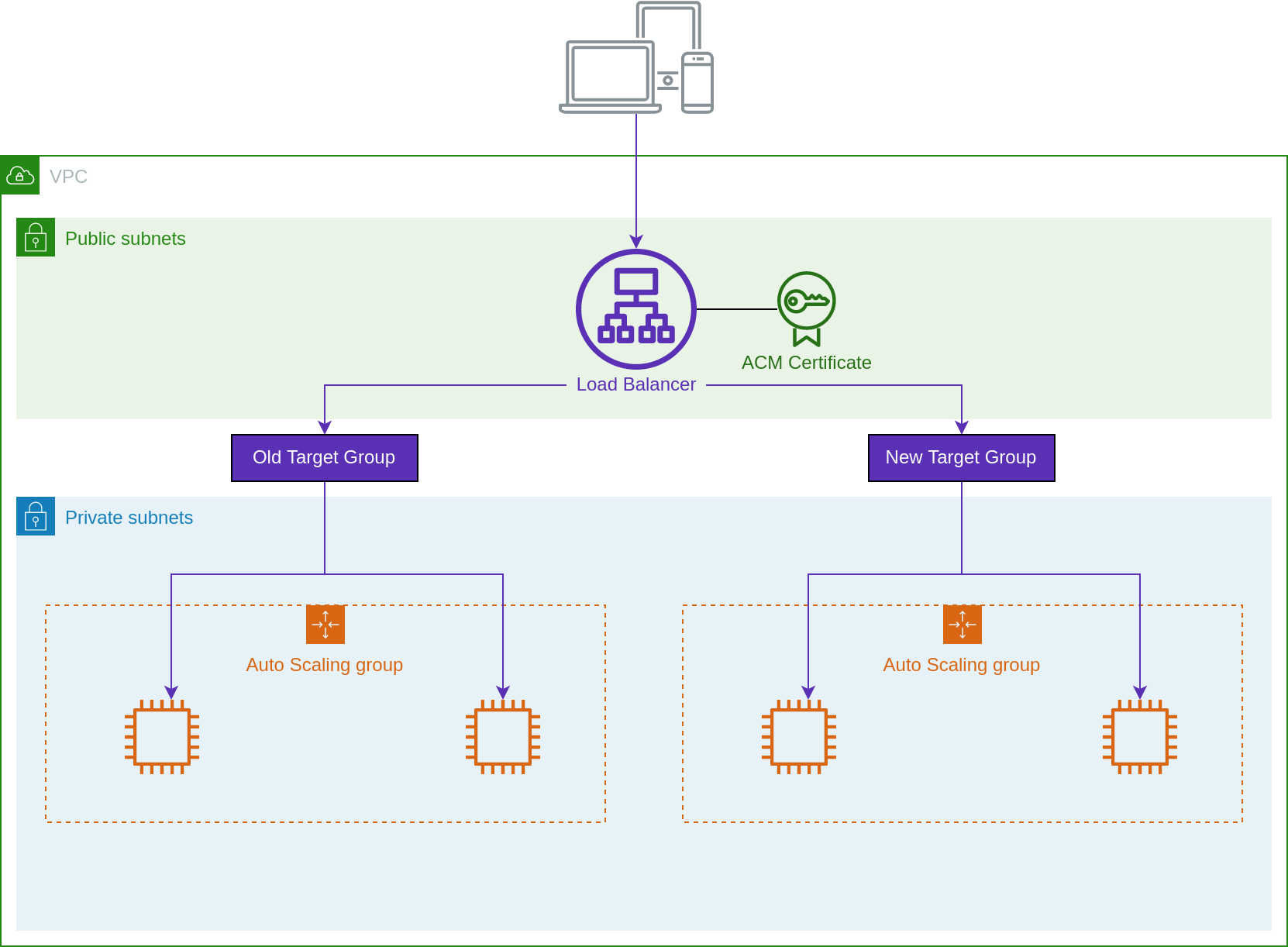

We recommend using a blue/green strategy for upgrading your cluster. Updating Kubernetes in-place can result in interruptions to running workloads. Updating Kubernetes will also frequently require updates to the Helm charts deployed to the cluster, which introduces further risk. By creating a new cluster when updating cluster components, you can ensure that workloads run properly on the new infrastructure before shifting production traffic and workloads.

Add new clusters to your network module

In order to provision a new cluster, you will need to update the tags for your VPC and other network resources so that the new Kubernetes cluster will find them.

    infra/
      network/
        sandbox/

Update your network module to include the name of the cluster you will be creating.

    module "network" {
      # Use the latest version of Flightdeck
      source = "github.com/thoughtbot/flightdeck//aws/network?ref=v0.12.1"

      cluster_names = [
        # Existing cluster
        "mycompany-sandbox-v1",

        # Add your new cluster
        "mycompany-sandbox-v2"
      ]

      # Other configuration
      name = "..."
    }

Apply this module to update the tags for your network resources.

Create a new sandbox cluster

This is an advanced topic for platform engineers.

Create a new root module in the cluster directory of your infrastructure repository.

    infra/
      cluster/
        sandbox-v2/

You can copy the configuration from the previous version of your cluster, updating the versions of Flightdeck and Kubernetes. We recommend running the latest version of Kubernetes supported by EKS.

    module "cluster" {
      # Update this to the latest version of Flightdeck
      source = "github.com/thoughtbot/flightdeck//aws/cluster?ref=v0.12.1"

      # Use the name of your previous cluster and bump the version
      name = "mycompany-sandbox-v2"

      # Use the latest version of Kubernetes supported by EKS.
      k8s_version = "UPDATE"

      # Copy existing configuration for node groups
      node_groups = {}
    }

Apply this module to provision a cluster and node groups running the latest supported versions.

Set up ingress for the new cluster

This is an advanced topic for platform engineers.

Edit the ingress stack for your sandbox clusters.

    infra/
      ingress/
        sandbox/

Add your new cluster to the list of clusters and target groups.

    module "ingress" {
      cluster_names = ["mycompany-sandbox-v1", "mycompany-sandbox-v2"]

      target_group_weights = {
        mycompany-sandbox-v1 = 100
        mycompany-sandbox-v2 = 0
      }
    }

Apply this module to create the new target group. Using the above weights, the target group will be created but will receive no traffic. We will wait until workloads are fully deployed to shift traffic.
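Once workloads are verified on the new cluster, traffic can be shifted by changing the same weights and applying the ingress module again. The following is a minimal sketch of one possible intermediate step. The even 50/50 split is an assumption chosen for illustration, not a value prescribed by Flightdeck, and all other module configuration is omitted as in the example above.

```hcl
module "ingress" {
  cluster_names = ["mycompany-sandbox-v1", "mycompany-sandbox-v2"]

  # Illustrative intermediate step: send roughly half of the traffic to
  # each cluster. The split is an assumption; pick increments that match
  # your own risk tolerance.
  target_group_weights = {
    mycompany-sandbox-v1 = 50
    mycompany-sandbox-v2 = 50
  }
}
```

When the new cluster handles its share without problems, a final apply with weights of 0 for the old cluster and 100 for the new one would complete the cutover.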

Deploy the Flightdeck platform

This is an advanced topic for platform engineers.

Create a new root module to deploy the Flightdeck platform for the sandbox cluster using the workload platform module.

    infra/
      platform/
        sandbox-v2/

You can copy the configuration for the previous version of the platform, updating the version of Flightdeck.

    module "platform" {
      # Use the latest version of Flightdeck
      source = "github.com/thoughtbot/flightdeck//aws/platform?ref=v0.12.1"

      # Copy configuration from the previous deployment
      cluster_name = data.aws_eks_cluster.this.name
      admin_roles  = []
      domain_names = []
    }

    data "aws_eks_cluster" "this" {
      # Use the name of the cluster you created in the previous step
      name = "mycompany-sandbox-v2"
    }

Apply this module to install the necessary components on your new cluster.
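One way to confirm that the platform module targets the intended cluster is to expose a few attributes of the data source above as outputs. This is a minimal sketch and not part of Flightdeck: the output names are hypothetical, while `version` and `status` are attributes exported by the `aws_eks_cluster` data source in the Terraform AWS provider.

```hcl
# Hypothetical outputs for verification; the names are illustrative only.
output "cluster_kubernetes_version" {
  description = "Kubernetes version reported by EKS for the new cluster"
  value       = data.aws_eks_cluster.this.version
}

output "cluster_status" {
  description = "Cluster status reported by EKS"
  value       = data.aws_eks_cluster.this.status
}
```

After applying, `terraform output` prints these values, which can be compared against the version set in the cluster module.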

Deploy workloads to the new cluster

Update your continuous deployment workflow to deploy to your new sandbox cluster.

GitHub Actions

Update your workflow to include a job that deploys to the new cluster:

    - name: Generate kube-config
      run: |
        aws eks update-kubeconfig --name mycompany-sandbox-v2 \
          --region us-east-1
        echo "KUBE_CONFIG<<EOF" >> $GITHUB_ENV
        cat ~/.kube/config >> $GITHUB_ENV
        echo 'EOF' >> $GITHUB_ENV
    - uses: azure/k8s-set-context@v2
      with:
        method: kubeconfig
        kubeconfig: ${{ env.KUBE_CONFIG }}
    # Other deploy steps
    - uses: azure/k8s-deploy@v4.2

Push your updated workflow and trigger a deployment. Ensure the deployment is successful and verify that your workloads are running successfully on the new cluster.

AWS CodePipeline

Add your new cluster to your deploy project:

    module "deploy_project" {
      source = "github.com/thoughtbot/terraform-eks-cicd//modules/deploy-project?ref=v0.3.0"

      cluster_names = [
        # Existing clusters
        "mycompany-sandbox-v1",
        "mycompany-production-v1",

        # Add your new cluster
        "mycompany-sandbox-v2"
      ]
    }

Update your staging (or other pre-production stages) module to deploy to the new cluster.

    module "ordering_staging" {
      source = "github.com/thoughtbot/terraform-eks-cicd//modules/cicd-pipeline?ref=v0.3.0"

      deployments = {
        # Deployment for existing cluster
        "sandbox-v1" = { ... }

        # Copy the deployment and update for the new cluster
        "sandbox-v2" = {
          cluster_name  = "mycompany-sandbox-v2"
          region        = "..."
          role_arn      = "..."
          manifest_path = "..."
        }
      }
    }

Apply this module and trigger a deployment. Ensure the deployment is successful and verify that your workloads are running successfully on the new cluster.

RDS Postgres Database Upgrade

This page summarises the steps to upgrade an RDS Postgres database, following this AWS guide. Review the referenced AWS guide for a more comprehensive description of the database upgrade process.

Modify the database to use a default parameter group. If the database uses a custom parameter group, you may get an error stating that the current parameter group is not compatible with the version you are upgrading to. To resolve this, switch the database to one of the default parameter groups created by AWS for the current Postgres version. For example, if the database is currently on Postgres 12, switch to the default.postgres12 parameter group.

Confirm that the current database instance class is compatible with the Postgres version you are upgrading to. You may review the list of supported database engines for DB instance classes.

Before starting the upgrade, confirm that there are no open prepared transactions by running the following query. If the upgrade is initiated during an off-hours period, there should be no open prepared transactions.

    SELECT count(*) FROM pg_catalog.pg_prepared_xacts;

Initiate a database backup before starting the database upgrade.

Perform a dry run of the upgrade in an environment where a failure has no impact, or on a duplicate of the database.

After the upgrade, run the ANALYZE operation to refresh the pg_statistic table. Optimizer statistics aren't transferred during a major version upgrade, so this operation regenerates all statistics to avoid performance issues. You may add the VERBOSE option to show the progress of the operation.

    ANALYZE VERBOSE;

See ANALYZE in the PostgreSQL documentation.
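If the database is managed with Terraform, the parameter group step above might be expressed as in the following sketch. It assumes the `aws_db_instance` resource from the Terraform AWS provider. The resource name, identifier, and target version are hypothetical, and all other required settings are omitted.

```hcl
resource "aws_db_instance" "example" {
  # Hypothetical identifier; use the settings of your existing database
  identifier = "mycompany-sandbox-postgres"
  engine     = "postgres"

  # Before the upgrade: attach the default parameter group for the
  # current major version (Postgres 12 in the example above)
  parameter_group_name = "default.postgres12"

  # For the upgrade itself (a later, separate change), a major version
  # bump needs allow_major_version_upgrade. Target values are assumptions:
  #
  #   engine_version              = "13"
  #   allow_major_version_upgrade = true
  #   parameter_group_name        = "default.postgres13"

  # Other configuration
  # ...
}
```

Keeping the parameter group change and the version change in separate applies makes it easier to tell which change caused a failure.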

Troubleshooting issues with the Postgres database upgrade

The database upgrade can fail during the precheck procedure because of incompatible configuration or incompatible extensions. To find the cause of the failure, check the Postgres logs. The following documentation describes how to retrieve them:

- https://docs.aws.amazon.com/AmazonRDS/latest/UserGuide/USER_LogAccess.Procedural.Viewing.html

- https://docs.aws.amazon.com/AmazonRDS/latest/UserGuide/USER_LogAccess.Procedural.Downloading.html

Known database errors

- During a previous database upgrade, I experienced issues with incompatible PostGIS extensions. I found the issue by reviewing the Postgres logs, and I was able to upgrade the extension using this guide: https://aws.amazon.com/premiumsupport/knowledge-center/rds-postgresql-upgrade-postgis/
