Inspired by some of the great talks at RailsConf 2009, such as “Automated Code Quality Checking in Ruby on Rails” and “Using metric_fu to Make Your Rails Code Better”, this Internbot was recently tasked with getting a similar system working for the open source projects and Rails client sites that live in the thoughtbot ecosystem.

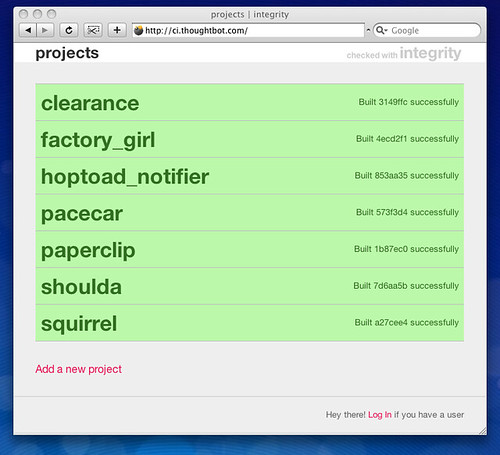

The first step of this process was to get a new CI server up and running.

thoughtbot was using CruiseControl.rb and RunCodeRun previously, but both had fallen out of use recently. I had some experience with setting up Integrity, which is a much easier system to use.

Adding a new project is dead simple: plug in your project’s Git URL, give it a build script to run (usually just `rake`) and boom, you’ve got CI. Integrity also supports post-receive hooks, so when we push commits, GitHub tells Integrity to grab the latest changes and get testing.

We also make use of the Campfire hook to let us know the results of the build.

It was easy to get Integrity itself running, but getting the tests passing for all of our projects was no simple task. Here are some of the gotchas I ran into that might help you out if you’re putting together your own CI server:

- Make sure your Rails apps’ databases exist. In hindsight, simply running `rake db:create:all` would have done the trick.

- Use a Linux distro with an up-to-date version of Ruby that’s easily available. We settled on Ubuntu 8.10 with Ruby 1.8.7 patchlevel 72.

- The build script can take any system command, so use it for setting up your test environment if necessary.

- Builds don’t run in the background, so switch them to a different port if you want to be able to view your projects while builds are running.
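To make the build script gotcha a bit more concrete, here’s a minimal sketch of a wrapper that prepares the test environment before running the suite. The step list, the `BUILD_STEPS` constant, and the `run_build` helper are all hypothetical names made up for illustration, not anything Integrity provides; swap in whatever commands your project actually needs.

```ruby
# Hypothetical build steps; adjust to what your project actually needs.
BUILD_STEPS = [
  "rake db:create:all",  # make sure the databases exist
  "rake db:migrate",     # bring the schema up to date
  "rake"                 # run the default task (usually the test suite)
].freeze

# Runs each command in order and stops at the first failure.
# Returns the failing command, or nil if every step succeeded.
# The runner is injectable so the logic can be tried without a shell;
# by default it shells out with Kernel#system.
def run_build(steps, runner = lambda { |cmd| system(cmd) })
  steps.find { |cmd| !runner.call(cmd) }
end

# Example usage from a script the CI server calls:
#
#   failed = run_build(BUILD_STEPS)
#   abort("Build failed at: #{failed}") if failed
```

Exiting non-zero on the first failed step means the CI server sees the whole build as failed, and later steps never run against a half-prepared environment.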

Check out our build server at http://ci.thoughtbot.com if you want to see the status of our projects.

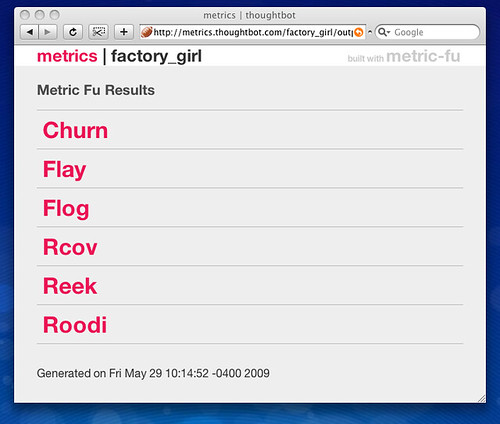

Next up was getting some metrics generated. The metric_fu project does a great job of this: with just one rake task, you can summon stats from Flay, Flog, RCov, Roodi, Reek, and more. That’s decent for one project, but for nearly 20 it doesn’t work so well. I ended up hacking a script together that does the following for each project that lives in the Integrity database:

- Runs metric_fu to generate stats

- Compares the last run to the current one to see if there’s any difference

- Alerts Campfire using Tinder if there are changes

- Logs the run in a Google Spreadsheet using google-spreadsheet-ruby
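The comparison step in that list can be sketched roughly as follows. This is not the actual script: it assumes each run has already been boiled down to a plain hash of metric name to number, and the metric names and the `metric_changes` and `change_summary` helpers are invented for the example.

```ruby
# Returns a hash of metric name => [previous, current] for every metric
# whose value differs between two runs. A metric missing from one run
# shows up with nil in that position.
def metric_changes(previous, current)
  changes = {}
  (previous.keys | current.keys).sort.each do |name|
    before, after = previous[name], current[name]
    changes[name] = [before, after] unless before == after
  end
  changes
end

# Builds a one-line summary suitable for posting to a chat room,
# or returns nil when nothing changed and no alert is needed.
def change_summary(project, changes)
  return nil if changes.empty?
  details = changes.keys.sort.map do |name|
    before, after = changes[name]
    "#{name}: #{before.inspect} -> #{after.inspect}"
  end
  "#{project} metrics changed: #{details.join(', ')}"
end

# Example with made-up numbers:
#
#   last_run = { "flog_average" => 12.4, "reek_smells" => 31 }
#   this_run = { "flog_average" => 11.9, "reek_smells" => 31 }
#   message  = change_summary("myapp", metric_changes(last_run, this_run))
```

Posting the message to Campfire and appending a row to the spreadsheet are left out on purpose, since those calls depend on the Tinder and google-spreadsheet-ruby versions you have installed.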

So around 4 times daily we get updates as to how ~~bad~~ good our code is, and eventually we’ll start seeing some trends in the spreadsheets. I also hacked together a custom template based on Integrity’s layout so it’s easier to browse the metrics, which is available in my fork. The next step is to integrate the tools into the Integrity build script, though we’re still in the process of deciding what factors will determine a failure.

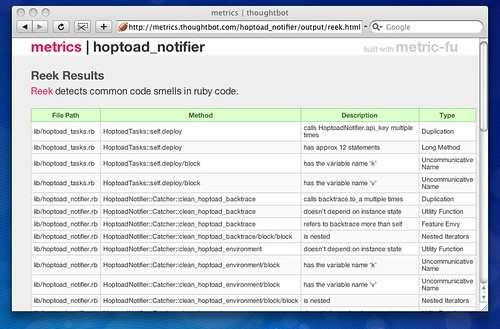

The metrics are up and available at http://metrics.thoughtbot.com if you’d like to browse around.

Both of these projects have been a lot of fun to work on. So far there are no penalties for breaking the build, but there are plenty of NERF guns around the office if we feel the need for retaliation. The Reek and Roodi results have been quite enlightening, and they seem to give great suggestions for refactoring. As for the trends, perhaps I’ll report back in a future edition of the Chronicles on the progress!