It’s 2025, and AI-powered developer tools have become an integral part of software development. More developers now use AI assistants for tasks like code completion, debugging, and code review. This rapid growth has produced many options, ranging from IDE plugins to standalone editors, each offering unique capabilities.

In this post, I’ll share the tools I use to enhance my development workflow and explore some of the free AI tools and plugins along the way. Hopefully this helps developers understand which tool might fit their specific needs. After all, choosing the right tool for a task can make a real difference to the software development experience.

Visual Studio Code has emerged as the clear leader among code editors, with a commanding 70% market share according to recent surveys. This dominance is especially evident in React and TypeScript development, where VS Code’s deep integration with these technologies makes it the default choice for most developers. Given this wide adoption, the tools in this post focus primarily on VS Code extensions and workflows.

Interaction with Large Language Models

AI development tools offer several distinct ways to interact with Large Language Models (LLMs), each designed to support different aspects of software development. While some tools might have their own unique features, they generally fall into three interaction modes: Chat, Edit, and Agent. These modes represent different approaches to leveraging AI assistance, from natural conversations to direct code modifications.

I love using editor-based tools because they reduce friction when interacting with LLMs. All my interactions stay inside the editor, sparing me from opening separate websites (ChatGPT, Gemini, etc.) and copy-pasting back and forth. This integrated experience makes getting AI assistance while coding more efficient, as the tools can access your codebase directly and provide contextually relevant help quickly. Let’s explore each mode in detail.

Chat mode

Chat/Ask mode lets you have natural conversations with LLMs and is my most common method of interaction. Think of it as having a buddy who’s always ready to talk things through and help. You can ask questions about your code, request explanations, or seek solutions to problems. By highlighting specific sections of code or referencing specific files, you provide the AI with relevant context for more accurate assistance. The conversation flows naturally, and you can apply suggested code changes directly from the chat interface. Conversations are stored locally on your machine, and because everything happens in the editor, you no longer need to context-switch to external websites.

Edit mode

Edit mode enables you to make targeted code changes in your editor. By highlighting code and describing the desired modifications, you get instant inline suggestions that you can accept or reject. It’s particularly useful for quick tasks like writing comments, generating similar unit tests, or refactoring functions. Think of it as a pair programming buddy who can make contextual edits to your code while you maintain full control over the changes that get applied.

Agent mode

Agent mode takes AI assistance to another level. Instead of just suggesting changes, it acts like a coding partner with direct access to your development environment. Think of it as having a helpful assistant who can:

- Search through your project files

- Make code changes across multiple files

- Run terminal commands to install packages

- Execute tests and help fix failures

It always asks for your permission before making any changes. This makes it perfect for tasks that usually require multiple iterations, like setting up a new testing framework or fixing a broken build.

All these actions happen with your oversight, so you maintain control while the agent handles the repetitive work. It’s particularly useful during bug fixes, where the agent can search through the codebase, identify similar patterns, and suggest comprehensive solutions.
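
To make the oversight idea concrete, here is a minimal sketch of a permission-gated loop. It is only an illustration of the pattern, not how Copilot, Continue, or any other tool is actually implemented: the `AgentAction` shapes, the `Approve` callback, and the `runWithOversight` function are all hypothetical names, and the actions are merely logged rather than executed.

```typescript
// Hypothetical action shapes; real agents define their own tool formats.
type AgentAction =
  | { kind: "search"; query: string }
  | { kind: "edit"; file: string; description: string }
  | { kind: "command"; command: string };

// Stands in for the approve/reject prompt an editor would show (assumption).
type Approve = (action: AgentAction) => boolean;

// Walks through a planned list of actions and records what would happen.
function runWithOversight(plan: AgentAction[], approve: Approve): string[] {
  const log: string[] = [];
  for (const action of plan) {
    // Read-only searches run freely; anything that changes state needs approval.
    const needsApproval = action.kind !== "search";
    if (needsApproval && !approve(action)) {
      log.push(`skipped ${action.kind}`);
      continue;
    }
    log.push(`ran ${action.kind}`);
  }
  return log;
}
```

In this sketch, rejecting a terminal command simply skips it while the rest of the plan continues, which mirrors the idea that the developer stays in control of every change.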

Tools

Let’s explore a few tools that bring these interaction modes to life.

GitHub Copilot

Copilot was one of the first tools to introduce AI-based autocomplete for writing code. It integrates seamlessly with VS Code and other editors, offering code suggestions as you type. It excels at:

- Writing boilerplate code and common patterns

- Improving unit tests based on your implementation

- Completing functions based on descriptions

- Converting comments into code

Copilot learns from existing code and suggests solutions that match your coding style and patterns. The suggestions are inline, making it feel like an extension of your typing rather than using an external tool.

While Copilot offers chat, edit, and agent modes, I mainly use its autocomplete feature. Developers get limited free access to several premium LLMs from Anthropic (Claude), OpenAI (GPT), and Google (Gemini). It also supports open source options like DeepSeek and Qwen. For developers concerned about privacy, there’s an option to use local LLMs as well.

Continue.dev

Continue.dev is a VS Code extension that brings AI assistance into your editor through chat, edit, and agent modes. What sets Continue apart is its flexibility in model selection: you can easily switch between different LLMs, including open source options. You can configure Continue to work with local models, open source alternatives, or premium tier models based on your needs and privacy preferences.

I primarily use its chat functionality, as the interface makes it easy to ask questions about code, request explanations, or brainstorm solutions. The ability to highlight code sections and reference specific files provides the AI with relevant context for more helpful responses. That said, there’s no reason to use both GitHub Copilot and Continue, so I recommend picking one of them as your interface to LLMs.

Coding agents

Beyond traditional AI assistants, agentic coding tools represent an evolution in this space. These tools can autonomously perform complex, multi-step tasks, ranging from analysing your project structure and adding or editing code to executing commands in your terminal.

Cline was built on Claude’s agentic capabilities to handle sophisticated software development tasks. It excels at converting mockups into functional apps and fixing bugs from screenshots, similar to Cursor.

Roo Code (a fork of Cline) functions as an autonomous coding agent that adapts to different roles through custom modes. Whether you need a coding partner, system architect, or QA engineer, Roo Code can adjust its personality and capabilities accordingly.

Kilo Code describes itself as a superset of both Cline and Roo Code, merging features from both codebases. It combines Cline’s MCP Server Marketplace integration and zero-configuration setup with Roo Code’s temperature control and multi-language support.

All these tools support multiple AI providers and can integrate with OpenRouter to access various models. I’ve had mixed success with them, since the code they generate can quickly become too complex for humans to follow. However, they’ve been successful at generating test code such as unit tests and end-to-end tests.

More LLMs with OpenRouter

Managing multiple AI subscriptions can quickly become expensive and overwhelming. If you’re like me, you might find yourself wanting to subscribe to the paid plans of ChatGPT, Claude, and Gemini to access different models for various tasks. That’s where OpenRouter comes in handy.

OpenRouter acts as a unified gateway to hundreds of LLMs, including premium models and open source alternatives, all through a single API. You get access to every model without juggling multiple accounts, billing systems, or API keys.

You can easily integrate OpenRouter with editor plugins like Continue or GitHub Copilot, giving you access to all the models. This approach is particularly useful for developers who don’t need constant heavy usage of any single model but want the flexibility to choose the right model for each task. Need Claude’s reasoning for a complex architecture decision? Switch to it seamlessly. Working on simple code completion? Use a faster, cheaper model like DeepSeek or Qwen. You might use GPT-4 for complex debugging sessions but switch to a lighter model like Llama for writing documentation or simple refactoring tasks.

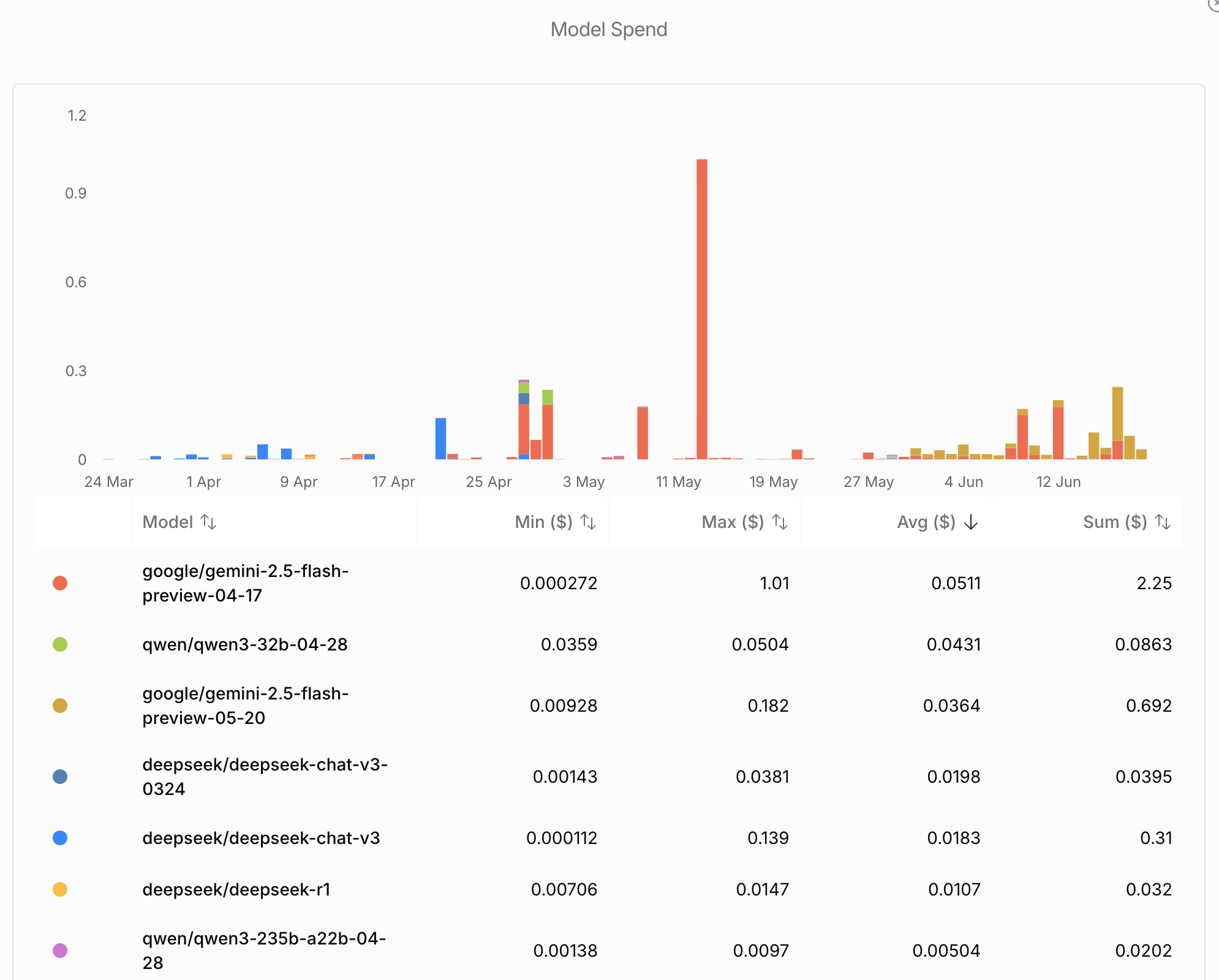
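
As a rough sketch of what picking a model per task looks like against a single API, the TypeScript below builds a chat completion request for OpenRouter. The endpoint follows OpenRouter’s OpenAI-compatible chat completions API. The `Task` type, the `MODEL_FOR_TASK` table, and the `buildChatRequest` helper are names I made up for illustration, and the model identifiers are examples only, so check OpenRouter’s model list for the current names.

```typescript
// Task categories taken from the examples in this section.
type Task = "architecture" | "completion" | "debugging" | "documentation";

// Illustrative model identifiers (assumptions): verify the exact, current
// names in OpenRouter's model list before using them.
const MODEL_FOR_TASK: Record<Task, string> = {
  architecture: "anthropic/claude-3.5-sonnet",
  completion: "deepseek/deepseek-chat",
  debugging: "openai/gpt-4",
  documentation: "meta-llama/llama-3.1-8b-instruct",
};

interface ChatRequest {
  url: string;
  init: { method: string; headers: Record<string, string>; body: string };
}

// Builds (but does not send) an OpenAI-style chat completion request.
function buildChatRequest(apiKey: string, task: Task, prompt: string): ChatRequest {
  return {
    url: "https://openrouter.ai/api/v1/chat/completions",
    init: {
      method: "POST",
      headers: {
        Authorization: `Bearer ${apiKey}`,
        "Content-Type": "application/json",
      },
      body: JSON.stringify({
        model: MODEL_FOR_TASK[task], // the only field that changes per task
        messages: [{ role: "user", content: prompt }],
      }),
    },
  };
}
```

The resulting `url` and `init` can be passed straight to `fetch(url, init)`. Switching from a premium model to a cheaper one is then a one-line change to the lookup table, with the same API key and the same billing account.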

OpenRouter maintains a simple approach to pricing and privacy. Users pay the standard provider rates plus a small markup, while getting the benefits of multiple models, unified billing and analytics.

Conclusion

The specific AI tool you choose matters less than having it integrated directly into your development environment. When AI assistance is easy to access, you’re more likely to use it effectively and consistently.

The shift from subscription-based to usage-based pricing can feel uncomfortable at first. However, you’ll quickly discover that paying per token often costs less than maintaining multiple subscriptions, especially when you can switch between models based on your needs. This flexibility becomes valuable when you realise that using premium models for routine tasks can cost roughly 300 times more than using capable alternatives that achieve similar results. Smart model selection can turn AI from an expensive luxury into a practical, cost-effective tool.
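
To get a feel for the arithmetic, here is a small back-of-the-envelope sketch. Every number in it is a hypothetical placeholder rather than a real provider rate: the two per-token prices were picked only so that their ratio matches the roughly 300x gap mentioned above, and the flat subscription fee is likewise an assumption. The helper names `usageCost` and `monthlyUsageCost` are made up for this example.

```typescript
// All prices are hypothetical placeholders, NOT real provider rates.
const PREMIUM_USD_PER_MILLION_TOKENS = 15;
const BUDGET_USD_PER_MILLION_TOKENS = 0.05;
const SUBSCRIPTION_USD_PER_MONTH = 20; // assumed flat fee per provider

// Cost of a number of tokens at a given price per million tokens.
function usageCost(tokens: number, usdPerMillionTokens: number): number {
  return (tokens / 1e6) * usdPerMillionTokens;
}

// Pay-per-token bill when work is split between a premium and a budget model.
function monthlyUsageCost(premiumTokens: number, budgetTokens: number): number {
  return (
    usageCost(premiumTokens, PREMIUM_USD_PER_MILLION_TOKENS) +
    usageCost(budgetTokens, BUDGET_USD_PER_MILLION_TOKENS)
  );
}

// Example month: 1M tokens on the premium model, 4M on the budget model.
const payPerToken = monthlyUsageCost(1e6, 4e6);
const threeSubscriptions = 3 * SUBSCRIPTION_USD_PER_MONTH;
console.log(`pay per token: $${payPerToken.toFixed(2)}`);
console.log(`three subscriptions: $${threeSubscriptions.toFixed(2)}`);
```

With these made-up numbers, the usage-based bill comes to about $15.20 against $60 for three subscriptions. The gap shrinks quickly if most tokens go to the premium model, which is why routing routine work to a cheaper model matters.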

The key is finding the right balance: achieving the goal of AI-assisted software development without breaking the bank.