This is the API-focused sequel to the Web-focused post. As before, the main takeaway from this post is to always check return values. A corollary is to design the user experience to include error messages in a helpful manner.

APIs have errors too

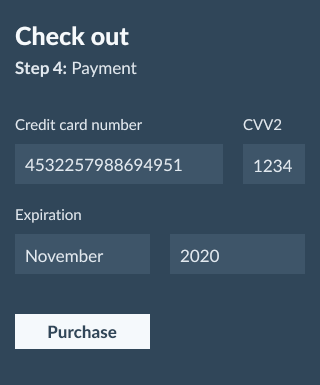

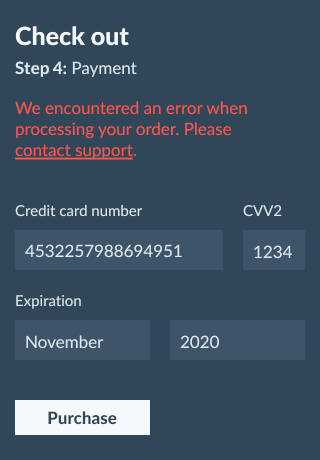

The second example is the more complex flow: synchronous and asynchronous work driven by a service object behind an API. We need to report as many errors as we can – if we’re performing a synchronous task, we need to dispatch on its result – but also leave room for errors that surface later. The UI dictates what we must communicate to the user, and we need to deliver that communication.

As mentioned before, this is a two-way street: the user experience team that builds the UI needs to work with the developers to understand what can go wrong. Sometimes the developers won’t find out until implementation is underway, and so the designers need to stick around and be prepared for an overhaul.

With an API there is a level of indirection: the UI team, mobile and other client teams, and backend team must work together to understand all that can go wrong and how the user should know about it.

Given what we know, we need to save the current checkout details, kick off a background job, and report what we can. We’ll use a service object to wrap some of these complexities; Checkout#commit saves the state and kicks off the background job. The GraphQL mutation that calls it looks like this:

```ruby
module Mutations
  class Checkout < BaseMutation
    argument :input, Types::CheckoutInput, required: true

    type Types::CheckoutOutput

    def resolve(input:)
      # ::Checkout is the service object; a top-level mutation class with the
      # same name would collide with it.
      checkout = ::Checkout.new(input.to_h.merge(user: current_user))

      if (job = checkout.commit)
        { checkout: checkout, job: job, errors: [], status: :in_progress }
      else
        { checkout: nil, job: nil, errors: checkout.errors.full_messages, status: :error }
      end
    end

    private

    def current_user
      context[:current_user]
    end
  end
end
```

We create a Checkout service object and call the method on it (#commit). This returns an object for tracking the background job on success; on failure it returns a falsy value and sets errors on the checkout instance. Finally, we return either the checkout and job objects with an in-progress status, or the errors with an error status.

This represents the “just before the view” point in an API. Let’s see what it takes to fill in the rest.

Playing with the full orchestra

Let’s start the service object by scoping out a naive implementation of our Checkout#commit method. This has a bug!

```ruby
class Checkout
  extend ActiveModel::Naming

  def initialize(args)
    @user = args[:user]
    @payment_token = args[:payment_token]
    @order = args[:order]
    @errors = ActiveModel::Errors.new(self)
  end

  ##
  # @return [ActiveModel::Errors] checkout errors
  attr_reader :errors

  ##
  # Advance the cart to the next step, preventing the user from double-checking
  # out. Enqueue a job to complete the charge.
  #
  # On failure, sets errors on {#errors}.
  #
  # @return [ActiveJob::Base, false] the job instance, or falsy on failure
  def commit
    @order.update(state: :processing)
    PaymentProcessorJob.perform_later(@payment_token, @order)
  end
end
```

The Checkout service object takes its arguments from the API: the user, the payment token, and the order we are purchasing. We make use of Rails’ error objects via the ActiveModel::Naming module and the ActiveModel::Errors class.

The actual checkout action (#commit) does two things: update the order’s state, and enqueue a background job to communicate with the payment processor. This method has a bug.

The issue is that we’ve violated our contract: we need #errors to produce any errors that we can generate synchronously. Our call to #update could generate useful errors – for example, if the user tries to press “Checkout” in a stale tab on an old cart – but we drop them into the bit bucket instead. Moreover, we march forward on an invalid update, enqueuing the job to charge the customer regardless.
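To make the broken contract concrete, here is a minimal plain-Ruby sketch that runs the naive #commit against an order whose update fails. StaleOrder and NaiveCheckout are hypothetical stand-ins for the ActiveRecord model and the service object; an Array plays the job queue and another plays ActiveModel::Errors.

```ruby
# Hypothetical stand-in for an ActiveRecord model whose #update fails the way
# a stale cart would: it returns false and records why on the order.
class StaleOrder
  attr_reader :errors

  def initialize
    @errors = []
  end

  def update(**_attributes)
    @errors << "Cart has changed since this page was loaded"
    false
  end
end

# Hypothetical plain-Ruby version of the naive service object.
class NaiveCheckout
  attr_reader :errors

  def initialize(order, queue)
    @order = order
    @queue = queue
    @errors = [] # never populated: this is the broken contract
  end

  def commit
    @order.update(state: :processing) # return value ignored
    job = [:charge, @order]
    @queue << job # the charge is enqueued regardless
    job
  end
end

order = StaleOrder.new
queue = []
checkout = NaiveCheckout.new(order, queue)
result = checkout.commit
```

The caller sees a truthy job and an empty #errors, so it reports success, while the only record of the stale cart sits on the order where nobody looks.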

By now we’re deep down the code path, far from the API response that will report this. Whatever decisions we made up the call stack are going to be hard to change now. This is often where people reach for exceptions. Note that a stale cart is not an exceptional situation; we’re reaching for an exception only because we coded ourselves into a corner.

```ruby
def commit
  @order.update!(state: :processing) # changed from a non-raising #update
  PaymentProcessorJob.perform_later(@payment_token, @order)
end
```

And honestly, I’d rather see #update! than an unchecked #update. At least it’s not hiding a bug.

But the better solution – and, luckily, the actual design we had set ourselves up for – is to leave the exceptions for the exceptional cases but to treat a failed #update as something we can tell the user about.

```ruby
def commit
  if @order.update(state: :processing)
    PaymentProcessorJob.perform_later(@payment_token, @order)
  else
    @errors.merge!(@order.errors)
    false
  end
end
```

#update returns whether it succeeded. On success, proceed as before: enqueue a payment job. On failure, set the errors on ourselves and return false. This fulfills the contract of the method.

Let’s go into detail about our method calls.

As was discussed with #find_by, #update can raise an exception on database failures. These are programmer bugs, which we are letting bubble up. It’s good that we’re considering these exceptions and recognizing how we want to handle them.

Next up is the #perform_later method, part of ActiveJob. This is documented to return an instance of the job class; it does this by calling the #enqueue method, which returns the job instance, or false on failure. This is exactly what we want out of our #commit method, so we can just return that. Perfect.

Perfect? What if the order is marked processing, but the payment job fails to enqueue? What if the underlying queue adapter raises (such as during a lost Redis connection)?

Let’s try to orchestrate these two error-producing things:

```ruby
def commit
  if @order.update(state: :processing)
    enqueue_payment_job
  else
    @errors.merge!(@order.errors)
    false
  end
end

private

def enqueue_payment_job
  job = PaymentProcessorJob.perform_later(@payment_token, @order)
  return job if job

  @errors.add(:base, :job_failed)

  unless @order.update(state: :pending)
    @errors.merge!(@order.errors)
  end

  false
end
```
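The compensating step is easier to see in isolation. This minimal plain-Ruby sketch uses a queue that always refuses the job; Order, FlakyQueue, and this Checkout are hypothetical stand-ins for the ActiveRecord model, the queue adapter, and the service object, with a plain Array replacing ActiveModel::Errors.

```ruby
# Hypothetical in-memory order whose updates always succeed.
class Order
  attr_accessor :state
  attr_reader :errors

  def initialize(state)
    @state = state
    @errors = []
  end

  def update(state:)
    @state = state
    true
  end
end

# Hypothetical queue mimicking perform_later's failure result: false.
class FlakyQueue
  def enqueue(_job)
    false
  end
end

# Hypothetical plain-Ruby version of the service object.
class Checkout
  attr_reader :errors

  def initialize(order, queue)
    @order = order
    @queue = queue
    @errors = []
  end

  def commit
    return copy_order_errors unless @order.update(state: :processing)

    job = @queue.enqueue([:charge, @order])
    return job if job

    @errors << :job_failed
    # Compensate: put the order back so the user can try again.
    copy_order_errors unless @order.update(state: :pending)
    false
  end

  private

  # Copies the order's errors onto the checkout and returns false.
  def copy_order_errors
    @errors.concat(@order.errors)
    false
  end
end

order = Order.new(:cart)
checkout = Checkout.new(order, FlakyQueue.new)
result = checkout.commit
```

Note that the compensating update can itself fail, which is where the extra nesting comes from.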

Ick. How about we enqueue it first, with a delay, then cancel it ASAP if the #update fails?

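```ruby
def commit
  if (job = PaymentProcessorJob.new(@payment_token, @order).enqueue(wait: 5.seconds))
    if @order.update(state: :processing)
      job
    else
      # ActiveJob has no built-in #cancel; this assumes a queue backend that
      # can remove a scheduled job before it runs.
      job.cancel
      @errors.merge!(@order.errors)
      false
    end
  else
    @errors.add(:base, :job_failed)
    false
  end
end
```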

No one said this was easy.
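Here is the schedule-then-cancel ordering as a plain-Ruby sketch. ScheduledQueue and its #cancel are hypothetical: ActiveJob itself does not offer a way to cancel an enqueued job, so a real version depends on what the queue backend supports. Order and the commit helper are likewise hypothetical stand-ins.

```ruby
# Hypothetical queue that records jobs scheduled to run after a delay and can
# remove one before it runs.
class ScheduledQueue
  Job = Struct.new(:id, :run_at, :payload)

  attr_reader :jobs

  def initialize
    @jobs = {}
    @next_id = 0
  end

  def enqueue(payload, wait:)
    @next_id += 1
    @jobs[@next_id] = Job.new(@next_id, Time.now + wait, payload)
  end

  # Returns true if the job was still pending and has been removed.
  def cancel(job)
    !@jobs.delete(job.id).nil?
  end
end

# Hypothetical order: #update returns a canned result, like ActiveRecord's.
Order = Struct.new(:update_succeeds, :errors) do
  def update(**_attributes)
    update_succeeds
  end
end

# Hypothetical helper mirroring the commit above.
def commit(order, queue, errors)
  job = queue.enqueue([:charge, order], wait: 5)
  unless job
    errors << :job_failed
    return false
  end

  if order.update(state: :processing)
    job
  else
    queue.cancel(job) # the 5-second delay is our window to do this
    errors.concat(order.errors)
    false
  end
end

queue = ScheduledQueue.new
errors = []
accepted = commit(Order.new(true, []), queue, errors)
rejected = commit(Order.new(false, ["Cart is stale"]), queue, errors)
```

Only the first job survives; the second is cancelled inside its delay window and its order's error is reported.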

I’d like to subscribe to your error newsletter

This covers the synchronous errors, but now we have a background job that is about to make a network request. As the comic says, “oh no!”

We need to communicate these errors back via the API. Assuming GraphQL and a patient mobile development team, we’re talking about a subscription. We’ll listen for subscription requests by the job ID, and on job updates we’ll send back a job status.

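```ruby
class JobStatusChanged < Subscriptions::BaseSubscription
  # Subscription inputs are arguments. ActiveJob's provider_job_id is
  # adapter-specific (often a string), so ID is the safer type.
  argument :provider_job_id, ID, required: true

  type Types::JobStatus

  def update(provider_job_id:)
    job_status = object

    if job_status.success? || job_status.failure?
      # unsubscribe halts this update, so hand it the final status to deliver.
      unsubscribe(job_status)
    else
      super
    end
  end
end
```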

Subscribers identify the job by its ID (provider_job_id in ActiveJob). When our job would like to broadcast an update to its subscribers, it will communicate this to graphql-ruby via a custom JobStatus object. If the status is a success or failure – if it is finished – we unsubscribe the client; we will have nothing more to say. Either way, the client receives the job status.

We need more infrastructure. Here’s the first pass of the background job:

```ruby
class PaymentProcessorJob < ApplicationJob
  ##
  # Capture the payment then notify the subscriptions about how it went.
  #
  # @return [void]
  def perform(payment_token, order)
    PaymentProvider.capture!(payment_token, order.total_price)
    order.update(state: :completed)
    notify_graphql(:ok)
  rescue PaymentProvider::Error => e
    notify_graphql(:fail, e)
  end

  private

  def notify_graphql(status, exn = nil)
    OurAppGraphQLSchema.subscriptions.trigger(
      "jobStatusChanged",
      { provider_job_id: provider_job_id },
      job_status_for(status, exn),
    )
  end

  def job_status_for(status, exn)
    case status
    when :ok
      JobStatus.new(success: true)
    when :fail
      JobStatus.new(success: false, errors: Array(exn.message))
    end
  end
end
```

The job reports its result through JobStatus, a small data class we create.

Our hypothetical payment provider offers a #capture! method to finalize the charge, but it raises an exception when the charge fails. So here we have to decide: do we let this exception bubble up, or do we transform it into something else?

Our goal is to notify our API subscribers. We programmed ourselves into a corner again: an exception would float into the ether, logged into our exception tracker, disappearing from the user’s view. We don’t want that. So we handle the exception that we expect: rescue it and notify our API subscribers.
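Stripped of Rails and graphql-ruby, the pattern is: rescue the one exception we expect and turn it into a status the subscribers receive. In this minimal sketch, JobStatus, SubscriptionRegistry, PaymentProvider::Error, and DecliningProvider are hypothetical stand-ins, and the registry drops a job’s subscribers once a finished status has been delivered, mirroring the unsubscribe step in the subscription class.

```ruby
# Hypothetical status object: success is true, false, or nil (still running).
JobStatus = Struct.new(:success, :errors, keyword_init: true) do
  def finished?
    !success.nil?
  end
end

# Hypothetical in-memory stand-in for GraphQL subscriptions, keyed by job ID.
class SubscriptionRegistry
  def initialize
    @listeners = Hash.new { |hash, job_id| hash[job_id] = [] }
  end

  def subscribe(job_id, &listener)
    @listeners[job_id] << listener
  end

  # Delivers the status, then forgets the job's subscribers once it finished.
  def trigger(job_id, status)
    @listeners.fetch(job_id, []).each { |listener| listener.call(status) }
    @listeners.delete(job_id) if status.finished?
  end

  def subscribed?(job_id)
    @listeners.key?(job_id)
  end
end

module PaymentProvider
  class Error < StandardError; end
end

# Hypothetical provider whose capture always fails.
class DecliningProvider
  def capture!(_token, _amount)
    raise PaymentProvider::Error, "Card declined"
  end
end

def perform(job_id, provider, registry)
  provider.capture!("tok_example", 4200)
  registry.trigger(job_id, JobStatus.new(success: true, errors: []))
rescue PaymentProvider::Error => e
  # The expected failure becomes a message for the user instead of vanishing.
  registry.trigger(job_id, JobStatus.new(success: false, errors: [e.message]))
end

registry = SubscriptionRegistry.new
received = []
registry.subscribe("job-1") { |status| received << status }
perform("job-1", DecliningProvider.new, registry)
```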

Astute readers will notice a mistake we’ve mentioned already, but there are two other errors that stand out to me.

We are using ActiveJob’s GlobalID mechanism to pass full objects through to the job. If the Order object is deleted from the database before this job runs (remember that five-second delay at the end of the prior section?), ActiveJob will raise an ActiveJob::DeserializationError. That said, our Order objects are permanent; if one is deleted, that is a programmer error, and it should bubble up to our exception tracker.

However, we would violate our contract – to notify our API subscribers – if we simply let it bubble up; we need to notify along the way. Let’s use rescue_from to handle all exceptions by notifying and then re-raising:

```ruby
class PaymentProcessorJob < ApplicationJob
  # rescue_from runs this block on the job instance, passing only the exception.
  rescue_from StandardError do |exn|
    notify_graphql(:fail, exn)
    raise exn
  end

  def perform(payment_token, order)
    PaymentProvider.capture!(payment_token, order.total_price)
    order.update(state: :completed)
    notify_graphql(:ok)
  end
end
```

We use the rescue_from control method to notify all subscribers about all exceptions, and then re-raise the exception.

The next issue that stands out to me is that our payment provider is making a network call. This can lead to timeouts, DNS errors, TLS errors, TCP errors, and HTTP protocol errors. Those are most definitely going to come in as exceptions. Some of these exceptions mean we should try again in a minute, and some don’t.
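The rescue_from handler described above boils down to “notify, then re-raise”. Here is a minimal plain-Ruby sketch of that shape; with_failure_notification is a hypothetical helper, and the notifier lambda stands in for notify_graphql(:fail, exn).

```ruby
# Hypothetical helper: runs the block; on any StandardError, tells subscribers
# and re-raises so the exception still reaches the exception tracker.
def with_failure_notification(notifier)
  yield
rescue StandardError => e
  notifier.call(:fail, e)
  raise
end

notifications = []
notifier = ->(status, exn) { notifications << [status, exn.class] }

reraised = nil
begin
  with_failure_notification(notifier) { raise KeyError, "order not found" }
rescue KeyError => e
  reraised = e
end
```

Subscribers hear about the failure, and the original exception still propagates unchanged.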

The retry is a notable aspect often left out of UI-related async discussions. The user will have some expectation that things are going well unless they hear of an error. After a discussion with the product team, everyone agrees to let the API know when a job is taking longer than expected, to allow the mobile team to experiment with informing the user.
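That policy can be sketched in plain Ruby: retry a retryable error a bounded number of times, telling subscribers before each new attempt that the job is taking longer, and report a final failure otherwise. run_with_retries and FlakyNetworkError are hypothetical; ActiveJob’s retry_on re-enqueues the job rather than looping in-process like this.

```ruby
# Hypothetical error class standing in for a transient network failure.
class FlakyNetworkError < StandardError; end

# Hypothetical helper: runs the block, retrying errors listed in `retryable`
# up to `attempts` runs in total. Subscribers hear :retry before each new
# attempt, :ok on success, and :fail once retries run out or a non-retryable
# error occurs.
def run_with_retries(retryable:, attempts:, notify:)
  attempt = 0
  begin
    attempt += 1
    yield
    notify.call(:ok, nil)
  rescue *retryable => e
    if attempt < attempts
      notify.call(:retry, e) # "this is taking longer than expected"
      retry
    end
    notify.call(:fail, e)
  rescue StandardError => e
    notify.call(:fail, e)
  end
end

# Succeeds on the third run.
events = []
calls = 0
run_with_retries(retryable: [FlakyNetworkError], attempts: 3,
                 notify: ->(status, _exn) { events << status }) do
  calls += 1
  raise FlakyNetworkError, "connection reset" if calls < 3
end

# Never succeeds: retries once, then gives up.
failures = []
run_with_retries(retryable: [FlakyNetworkError], attempts: 2,
                 notify: ->(status, _exn) { failures << status }) do
  raise FlakyNetworkError, "still down"
end

# Not retryable: fails immediately.
immediate = []
run_with_retries(retryable: [FlakyNetworkError], attempts: 3,
                 notify: ->(status, _exn) { immediate << status }) do
  raise ArgumentError, "invalid amount"
end
```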

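```ruby
class PaymentProcessorJob < ApplicationJob
  # ActiveJob::DeserializationError is not discarded: the rescue_from
  # StandardError handler notifies, then lets it reach the exception tracker.
  discard_on EOFError, Errno::ECONNRESET, Errno::EINVAL, Net::HTTPBadResponse,
             Timeout::Error do |job, exn|
    job.notify_graphql(:fail, exn)
  end

  # retry_on calls its block only after the last attempt has failed.
  retry_on Net::HTTPHeaderSyntaxError, Net::ProtocolError, wait: 1.minute do |job, exn|
    job.notify_graphql(:fail, exn)
  end

  # A retry re-enqueues the job; executions is 0 only on the first enqueue.
  after_enqueue do |job|
    job.notify_graphql(:retry) if job.executions.positive?
  end

  # The handlers above call notify_graphql with an explicit receiver.
  public :notify_graphql

  private

  def job_status_for(status, exn)
    case status
    when :ok
      JobStatus.new(success: true)
    when :fail
      JobStatus.new(success: false, errors: Array(exn.message))
    when :retry
      JobStatus.new(success: nil, status: :retry)
    end
  end
end
```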

We use the discard_on and retry_on control methods to describe what to do on various exceptions. If we retry, we notify the subscribers of that fact through our JobStatus data object.

With that in place, this example still fails to apply a lesson from an earlier section: we call #update without checking the return value. But what does it mean for the #update to fail? Again, it is a corner case that did not come up during the design.

If the #update fails, the customer has been charged but the order stays in the processing state. It will appear in their list of in-progress purchases – at this point, forever – but their credit card bill will reflect the reality that they have paid for the product. And without moving to the completed state, the fulfillment center will never see it.

The stakes are high, but luckily this specific failure is rare. The solution here can be as simple as notifying the customer support team but otherwise treating this as a success.

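```ruby
def perform(payment_token, order)
  PaymentProvider.capture!(payment_token, order.total_price)

  unless order.update(state: :completed)
    # Assuming Mailer is an ActionMailer class: calling the method only builds
    # the message. deliver_later sends it from its own job, leaving any mail
    # failure to the exception tracker.
    Mailer.notify_cx_about_stuck_order(payment_token, order).deliver_later
  end

  notify_graphql(:ok)
end
```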

We now check the return value of #update. If it fails, we notify our customer support team and move on with life.

Let’s leave the mail failure for the exception tracker. This allows us to complete the checkout flow just in time to end this article.

Conclusion

We followed two common examples – authentication and checkout – through all their error-handling woes. Along the way we uncovered corner cases and found places where the design did or did not lend itself to helping the user through failure. The design dictated the implementation; thinking about errors up front helped us build a system for handling most of what can be thrown at us.

For each method we used, we thought through its failure modes. This included its return value, its documented inputs, and its exceptions both known and undocumented. We bucketed failures into user-fixable, user-visible, and programmer error, and we handled each bucket differently.

Following this practice exposed a consistent set of patterns that will quiet the growing noise in the exception tracker and reduce the number of bugs hiding for users to discover after the next refactoring.

With a little forethought and discipline, our users can have a more informative and stable app experience.

(Thank you to Eric Bailey for his wonderful UI mocks used throughout this post.)