There’s a really cool way of operating your computer buried deep in macOS’ System Preferences.

Head Pointer was first introduced in a beta of macOS Catalina. As its name implies, it “allows the pointer to be controlled using the movement of your head captured by the camera.” If you’re using a MacBook or an iMac, good news! It already has the camera you’ll need to try this out.

Before I teach you how to enable this, I figure I could show it to you in action:

In this video I am using the motion of my head, as well as discrete facial feature movements, to browse the thoughtbot.com website.

Why does this exist?

Not everyone uses a mouse or trackpad to use a computer.

There are many permanent and situational motor control disabilities that may prevent you from using your arms or hands. They range in severity from a permanent spinal cord injury or cerebral palsy to arthritis or being circumstantially prevented from using your hands.

There is also a range of assistive technology options to help people experiencing these conditions: head wands, mouth sticks, switch controls, voice recognition software, and, yes, you guessed it, face tracking.

What can I do with it?

Pretty much anything you can do with a mouse or trackpad you can do with Head Pointer. And that’s the, er, point. At the heart of accessibility is the notion of equivalency. Someone should be able to use your product regardless of their device, ability, or circumstance.

How can I design for it?

You don’t need to redo your entire UI to accommodate this kind of input mode. In fact, that’s sort of the point.

Since you can’t know who your end user is or what their circumstances are, you want to proactively accommodate the widest range of input types and situations. To do this, you can reference the Web Content Accessibility Guidelines (WCAG), an international standard that defines what constitutes an accessible experience.

Thinking about the experience of using your head to control a pointer, we know that its movements are less precise than those you make with your hands. In addition, holding your head at an angle for a sustained period can be uncomfortable, or even painful.

To accommodate these concerns, we want things like larger touch targets that are easier to hit, with some spacing between them to prevent mis-clicks. We also want to remove things that rely on timing or countdowns, since it might take more time than anticipated to line the pointer up. Tooltips that appear after a delay are another example, as they may appear and cover the content someone is actually trying to reach.

These aren’t arcane technical concerns, or edge-case design considerations. They do, however, map back to two WCAG success criteria: 2.5.5: Target Size and 2.2.3: No Timing.
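To make the target size and spacing advice a little more concrete, here is a minimal sketch of how you might check a pair of controls. The 44 by 44 CSS pixel minimum comes from WCAG 2.5.5. Everything else is an assumption for illustration: the `Rect` shape, the function names, and especially the 8 pixel minimum gap, which WCAG 2.5.5 does not define.

```typescript
// Illustrative sketch only: these names are hypothetical, not part of any library.
interface Rect {
  x: number;
  y: number;
  width: number;
  height: number;
}

// WCAG 2.5.5 asks for pointer targets of at least 44 by 44 CSS pixels.
const MIN_TARGET_SIZE = 44;

// Assumed value for illustration; WCAG 2.5.5 does not specify a gap.
const ASSUMED_MIN_GAP = 8;

// True when the target is at least `min` CSS pixels in both dimensions.
function meetsTargetSize(rect: Rect, min: number = MIN_TARGET_SIZE): boolean {
  return rect.width >= min && rect.height >= min;
}

// Shortest distance between the edges of two rectangles.
// Returns 0 when they touch or overlap.
function gapBetween(a: Rect, b: Rect): number {
  const dx = Math.max(0, Math.max(a.x, b.x) - Math.min(a.x + a.width, b.x + b.width));
  const dy = Math.max(0, Math.max(a.y, b.y) - Math.min(a.y + a.height, b.y + b.height));
  return Math.sqrt(dx * dx + dy * dy);
}

// True when two targets are far enough apart to make mis-clicks less likely.
function hasEnoughSpacing(a: Rect, b: Rect, minGap: number = ASSUMED_MIN_GAP): boolean {
  return gapBetween(a, b) >= minGap;
}
```

In a browser, you could feed these functions the result of `element.getBoundingClientRect()`, which exposes the same `x`, `y`, `width`, and `height` properties. Treat a check like this as a rough audit aid rather than a conformance test, since 2.5.5 also lists exceptions that this sketch ignores.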

How do I enable it?

Glad you asked! If you are using macOS Catalina (version 10.15) or later:

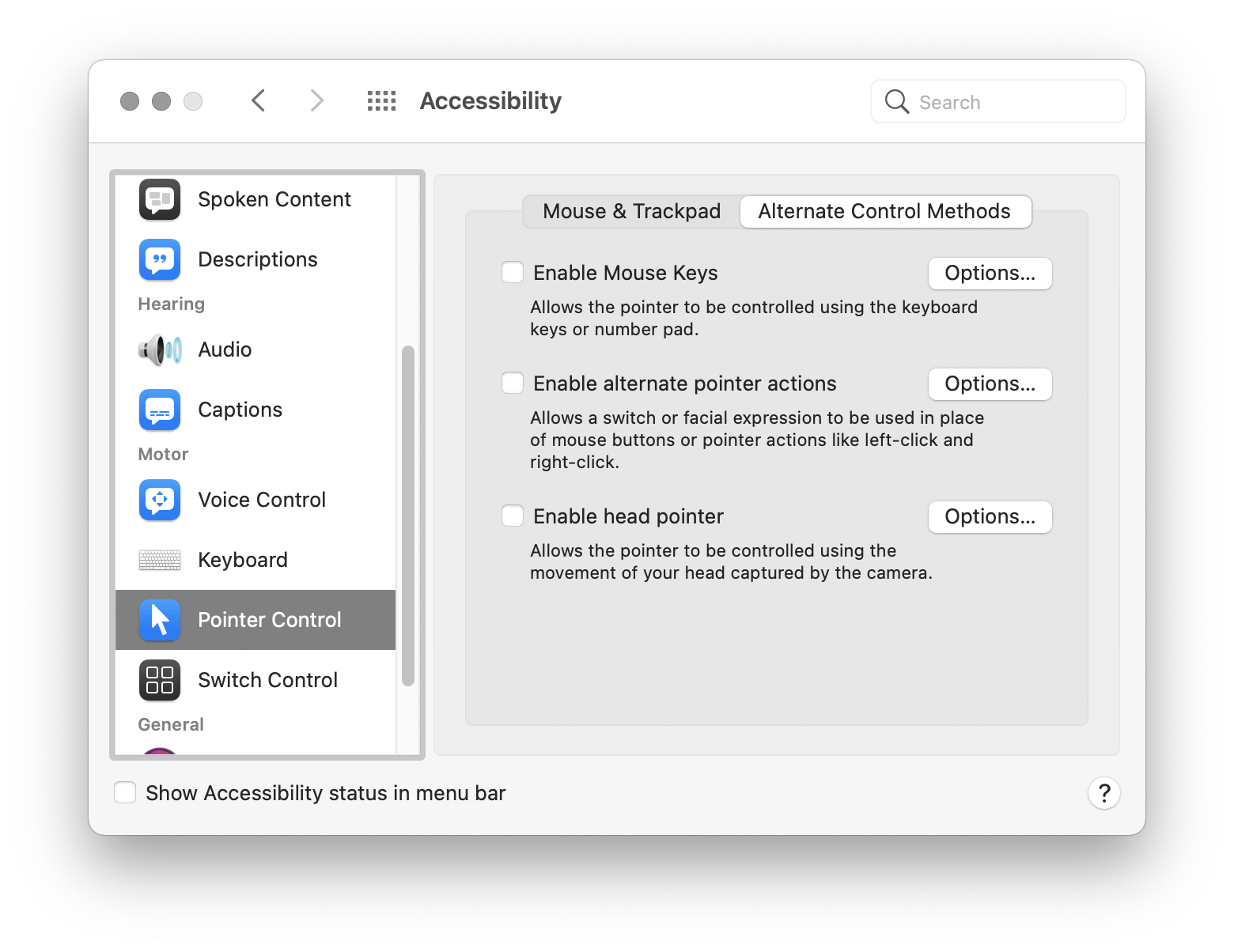

- Go to System Preferences.

- Go to Accessibility.

- Scroll down the sidebar to go to Pointer Control, located in the Motor section.

- Go to the Alternate Control Methods tab.

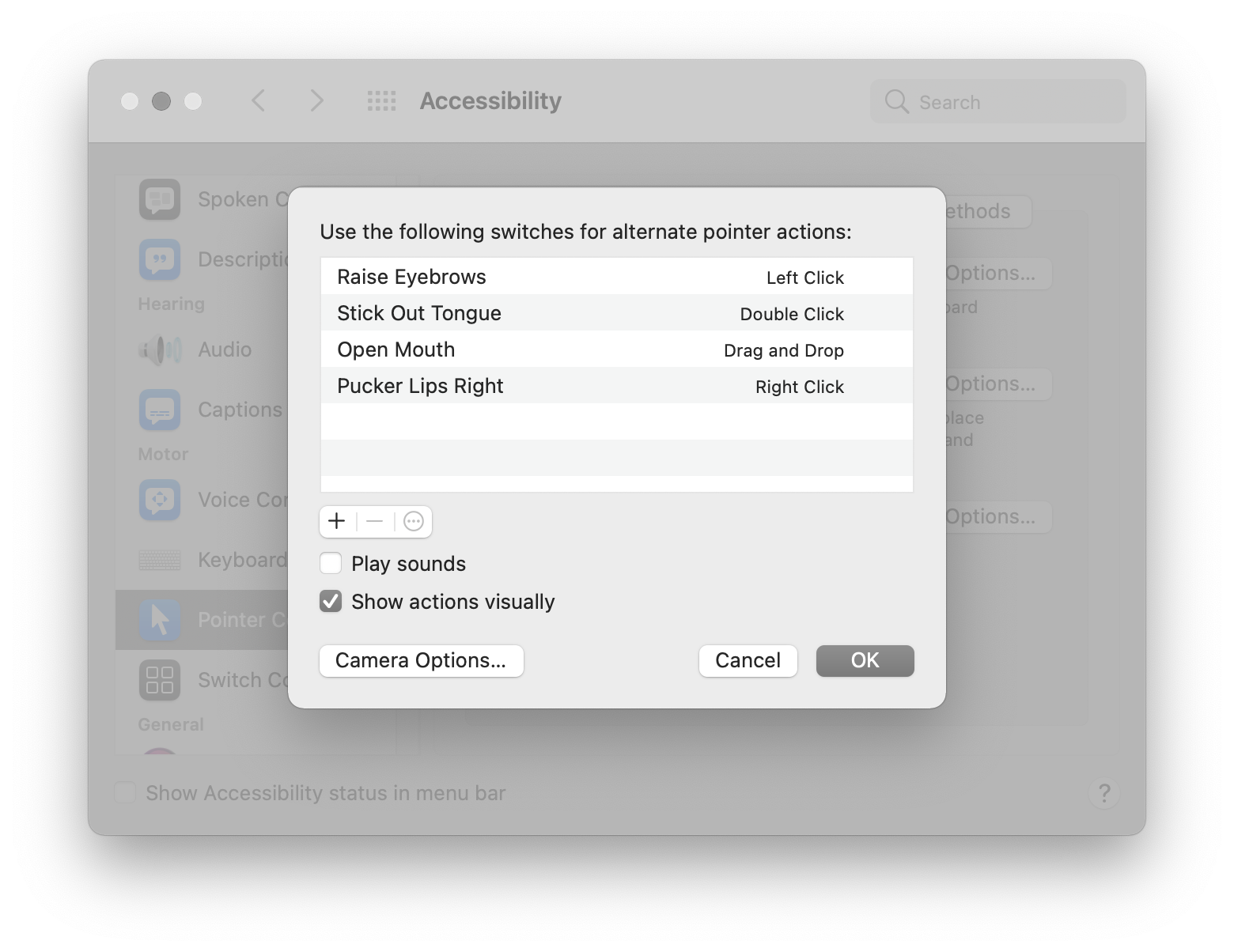

You’ll then want to open options for Enable alternate pointer actions. Here, you can define what facial expressions you want to use for what actions. This is the setup I’m using:

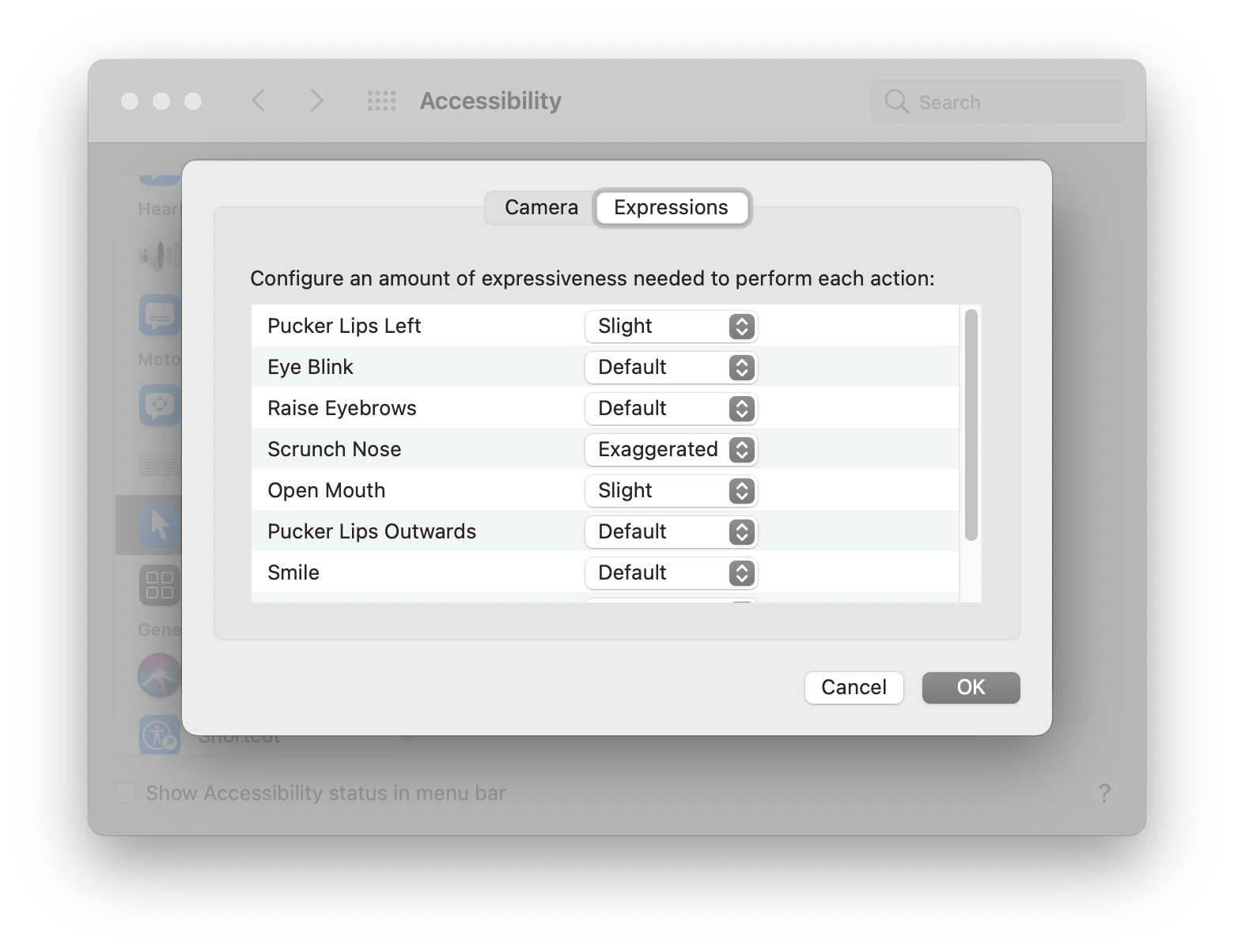

You can also specify what camera to use (defaults to the FaceTime camera if present), and importantly, how expressive a facial expression needs to be to trigger an action.

Because the field of facial detection is rife with algorithmic bias, especially with regard to both race and disability, it’s great to have options to work with.

These kinds of controls allow someone to tailor the experience and shape it in a way that works for them, without the software needing to explicitly know what their needs are. They provide mechanisms to accommodate dark skin tones, partial facial paralysis, partial obscurement, piercings, tattoos, and facial hair, all things that have traditionally affected facial recognition software.

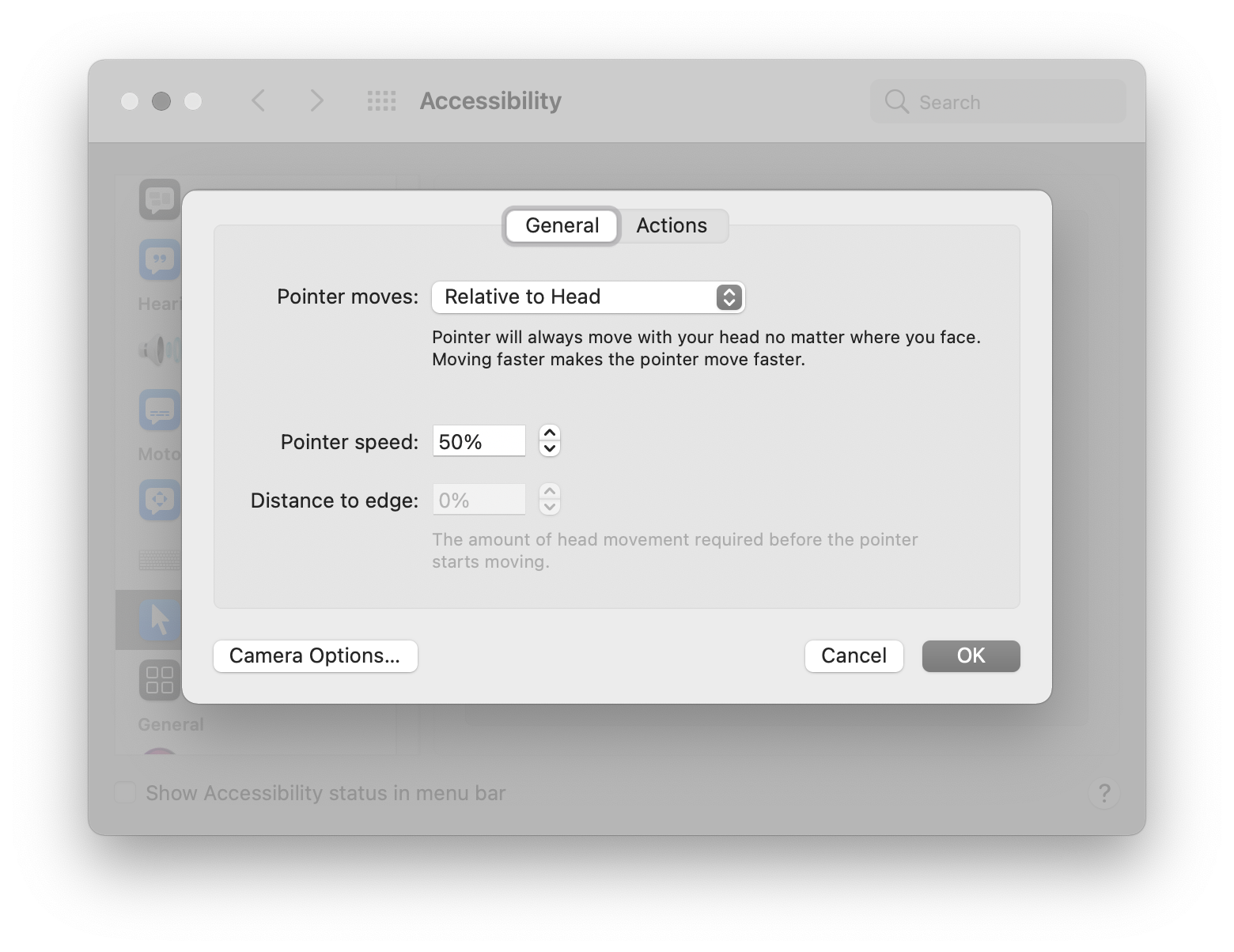

You may also want to check out the options for Enable head pointer. Here’s what I’ve set mine to:

Once everything is configured to your liking, check both Enable alternate pointer actions and Enable head pointer. You should now be able to remove your hands from the mouse or trackpad and move your head around to move the pointer!

You can disable this interaction mode by unchecking Enable alternate pointer actions and Enable head pointer. You should also be able to use your keyboard, mouse, and trackpad while it is active. This is a great example of multimodal interaction.

My thoughts

I really enjoy this feature, and I appreciate that it is Apple doing it.

Operating systems are the foundation we build a lot of our personal and professional lives on. Because of this, I think it is vital for them to be as accessible as possible. Features such as this go the extra mile to accomplish that, and push against the myth that accessibility is only about blind people.

It’s also important that it happens on the operating system level, in that this kind of thing needs to affect literally everything you can do on a computer, not just a single program or suite of apps. Consistency of interaction in this regard is also vital. It would be a nightmare if every app tried to do its own version of this.

Setting aside the astounding cost of Apple hardware for a second, this feature is also free. You don’t have to research and buy specialized software and pray it works the way you need it to. Windows ships with similar functionality, but you need to buy hardware to get it working. Google? Well, I have a lot of trust issues, especially given the importance of privacy in the context of disability.

Zooming out a bit, this kind of functionality also digs into the core of usability, which is removing barriers and friction when operating a digital device. Have you ever been in the middle of a flow state and glanced at a window, expecting it to come into focus without clicking on it? That’s exactly what this kind of functionality is pushing towards.

Multiple ways

You might be thinking that operating a mouse without using your hands is cool, but be left wondering how to type without using a keyboard. Well, guess what? In the next post in this series I’ll cover how to do just that!